The Ultimate Containerization Guide: Docker, Kubernetes and More

Containers are for everyone. Learn how to get started with them.

When working with software, countless companies have bemoaned the fact that the virtual machines needed to run its different parts increase their costs considerably. Recently, many have started using a method called containerization, which bundles an application together with all of its related configuration files, libraries, and dependencies. So why are huge companies like IBM and Google so excited about this approach? Simply put, containerization helps companies reduce overhead costs, makes software more portable, and allows it to be scaled much more easily.

In this article, we’ll go over:

- Why container technology is becoming so popular

- A brief history of containerization

- An overview of Docker and Kubernetes

- Who should use containers

- The best container solutions

Containerization vs. Virtualization

Many people pit containerization against virtualization as if they were completely different approaches, which isn’t the best way to look at things. Containerization addresses many of the shortcomings of full virtualization, which is why it has gained favor with developers across the world.

So, to get started, let’s take a look at a few differences between the two technologies:

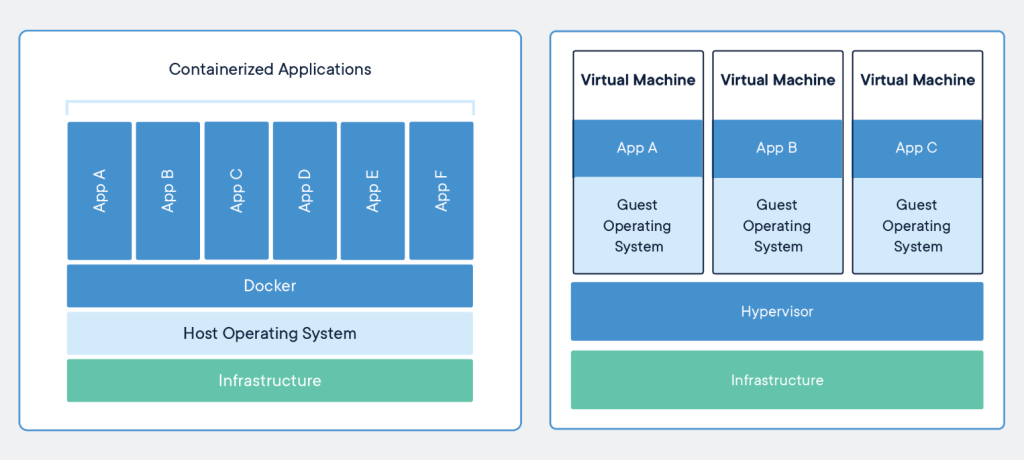

Virtualization

Once a software component called a hypervisor is installed on the server, you can create virtual machines (VMs), which act as separate servers running their own operating systems. This approach often ends up being expensive, since the hypervisor and all of the VMs can take up around 20% of a server’s performance.

Containerization

Containerization is virtualization at the operating-system level. Containers run as isolated processes, but they share a common OS kernel and, where possible, binaries and libraries. This uses far less disk space and memory than a traditional VM, which reduces overhead costs considerably.

Containers are much quicker to create and start because, unlike VMs, you don’t need to install an operating system every time you set one up. Deployment is also easier: because containers are isolated from their surrounding environment, moving them to a different environment is much simpler.

Containerization vs. Virtualization. Source: Docker

History of containers: 1970-now

Many of us first started hearing about containers in 2013, when an up-and-coming open-source project called Docker appeared and brought containers into the public sphere. But the idea of containers is actually much older than that.

Back in the 70s, the operating system UNIX came out with a “chroot” (change root) command, which allowed users to isolate file systems.

About 20 years later, FreeBSD built on this idea to create the jail command, which let admins divide a computer system into smaller systems (or “jails”).

In 2005, Solaris OS created containers that isolated applications so that they could run independently without potentially affecting each other.

One year later, Google engineers created cgroups (control groups), a technology that was later merged into the Linux kernel and led to the emergence of LXC, a “lightweight” form of virtualization.

But all of these solutions still required people with extensive knowledge to configure them manually, so they didn’t gain much traction outside expert circles.

Docker solved this issue in 2013. It built on Linux containers, added more functionality (like portable container images), and made containers easier to manage.

And just like that, Docker’s containers became almost an overnight success.

Want your own container?

On Master’s virtual servers with KVM virtualization, you can launch containers yourself and try out how to work with them.

Docker: #1 in container technology

While there are a few options to choose from, Docker is, without a doubt, the most widespread container technology today: 83% of all containers run on Docker. But what is Docker?

Docker is software that allows users to encapsulate apps, libraries, configuration files, binaries, and other dependencies into a container so that they can run in any environment without issues.

But Docker doesn’t stop there: the software also manages the container from creation to the end of its lifecycle.

Other container technologies exist (LXC, OpenVZ, CoreOS, etc.), but they aren’t as well known as Docker because they require experts to set them up and maintain them.

Docker Terms You Should Know

You’ll often run into a whole range of terms in connection with Docker. What exactly do they mean?

- Dockerfile: a text file with instructions for building a Docker image. It specifies the operating system the container will run on, languages, locations, ports, and other components.

- Docker image: a compressed, standalone piece of software built by the commands in a Dockerfile. It is essentially a template (the application plus the required libraries and binaries) needed to create and run a Docker container. Images can be used to share containerized applications.

- Docker run: the command that starts containers. Each container is an instance of one image.

- Docker Hub: a repository for sharing and managing containers, where you’ll find official Docker images from open-source projects as well as unofficial images from the public. It is also possible to work with local Docker repositories.

- Docker Engine: the core of Docker, a client-server technology that creates and runs containers.
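
To make the relationship between an image and the `docker run` command more concrete, here is a minimal Python sketch that only assembles the command line and does not execute anything. The `ContainerSpec` class and `docker_run_command` function are hypothetical helpers invented for this illustration, and the image name and ports are example values; `--detach`, `--name`, and `--publish` are standard `docker run` options.

```python
from dataclasses import dataclass, field


@dataclass
class ContainerSpec:
    """Hypothetical description of one container to start from an image."""
    image: str  # image to instantiate, e.g. one pulled from Docker Hub
    name: str   # name given to the running container
    ports: dict[int, int] = field(default_factory=dict)  # host port -> container port


def docker_run_command(spec: ContainerSpec) -> list[str]:
    """Build the argument list for `docker run` without executing it."""
    cmd = ["docker", "run", "--detach", "--name", spec.name]
    for host_port, container_port in sorted(spec.ports.items()):
        cmd += ["--publish", f"{host_port}:{container_port}"]
    cmd.append(spec.image)  # the image always comes after the options
    return cmd


if __name__ == "__main__":
    # Example values only: one container created from the nginx image.
    web = ContainerSpec(image="nginx:latest", name="web", ports={8080: 80})
    print(" ".join(docker_run_command(web)))
```

Calling the function twice with different names would describe two containers created from the same image, which is the sense in which each container is an instance of one image.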

Kubernetes and other orchestrators

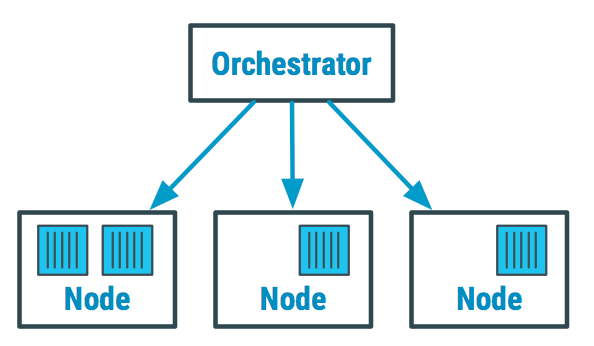

Managing containers manually on a single server is tough enough, but what if your containers are spread across hundreds of servers? Impossible, right? Not for an orchestrator like Kubernetes.

An orchestrator is a tool that manages container networks, including the launching, maintaining, and scaling of containerized applications.

Orchestrators have made the lives of many people significantly easier, especially microservices developers. They carry out tasks like making sure that server capacity is used efficiently, launching services as needed, stopping old container versions and activating new ones, and more.
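
One of those tasks, using server capacity efficiently, can be sketched as a toy placement routine. This is a simplified illustration under stated assumptions, not how Kubernetes or any real orchestrator schedules work: the `place_containers` function, the server names, and the idea of counting capacity in "slots" are all hypothetical.

```python
def place_containers(capacity: dict[str, int], replicas: int) -> dict[str, int]:
    """Assign `replicas` containers to servers, one at a time.

    `capacity` maps a (hypothetical) server name to the number of containers
    it can hold. Each container goes to the server with the most free slots.
    """
    placed = {server: 0 for server in capacity}
    for _ in range(replicas):
        # Pick the server that currently has the most free slots.
        server = max(capacity, key=lambda s: capacity[s] - placed[s])
        if capacity[server] - placed[server] <= 0:
            raise RuntimeError("not enough capacity for the requested replicas")
        placed[server] += 1
    return placed


if __name__ == "__main__":
    # Two example servers with two slots each, three containers to place.
    print(place_containers({"server-a": 2, "server-b": 2}, replicas=3))
```

A real orchestrator weighs CPU, memory, and many other constraints, but the basic loop of comparing what is requested with what each server can still take is the same idea.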

Orchestrator is software above a container network. Source: Devopedia

There are quite a few container orchestration tools out there but, just as with container technologies, one stands out from the pack: Kubernetes, an open-source orchestrator with a wide variety of options that can be customized to fit your needs.

To show you why Kubernetes is the clear winner, let’s take a look at some alternatives.

Docker Swarm

Docker’s orchestrator, Docker Swarm, is simpler than Kubernetes, but it was designed primarily for smaller container clusters. While Kubernetes takes a bit more work to deploy, it’s a much more comprehensive, Docker-approved solution that gives you an easy-to-manage and resilient application infrastructure.

Mesos

Mesos, an Apache project, isn’t just an orchestration tool; it’s essentially a cloud OS that coordinates both containerized and non-containerized components. Many platforms can run simultaneously on Mesos, including Kubernetes.

Microservices and Containers

Container technologies are often mentioned in connection with microservices. What are they?

Microservices are a way of developing and running applications that are split into smaller modules, which communicate with each other through APIs. Unlike large monolithic applications, microservices are easy to maintain, because a change in one module does not affect the other parts.

The microservices concept began to emerge independently of container development, but it is containers that make it possible to use a microservices architecture efficiently in practice. Developing microservices in containers is made possible by orchestrators, which handle the management of huge numbers of containers and also enable features such as rolling updates (when a new version of an application is deployed, replicas of both versions are kept in case a rollback to the previous version is needed).
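
The rolling update idea can be illustrated with a small simulation. This is a deliberately simplified sketch, not the algorithm a real orchestrator uses: the `rolling_update` function and the version labels are hypothetical, and replicas are replaced strictly one at a time.

```python
def rolling_update(replicas: list[str], new_version: str) -> list[list[str]]:
    """Replace replicas one at a time and return the replica set after every step.

    Each entry in `replicas` is the version label of one running replica.
    During the update, old and new versions run side by side.
    """
    current = list(replicas)
    history = [list(current)]  # state before the update starts
    for i in range(len(current)):
        if current[i] != new_version:
            current[i] = new_version       # swap one old replica for a new one
            history.append(list(current))  # record the intermediate state
    return history


if __name__ == "__main__":
    for step in rolling_update(["v1", "v1", "v1"], "v2"):
        print(step)
```

Because the intermediate states still contain replicas of the old version, a rollback is just the same procedure run from an intermediate state with the old version as the target.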

Can containers help me?

We now know that using containers can have quite a few benefits, but you might be wondering whether this technology can help you.

To make the answer simple: anyone who works with software can benefit from using container technology.

Let’s just take a look at a few ways containers can help you:

- Containers make software super portable. Since the software is enclosed in a container, it can run on any machine without issue.

- Containers are more secure. Isolated software won’t have a negative impact on other systems.

- Containers make software easy to scale. Whether the number of users is increasing or decreasing, containers allow you to react immediately.

Making Scaling Easy

Scaling with containers is very fast (much faster than creating a new virtual machine), so it is possible to react immediately to large swings in application usage. Containers also make better use of server resources because the application is split into smaller parts. On top of that, some microservices can be scaled out many times and others just once, as needed.
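
As a rough illustration of scaling each microservice independently, the sketch below computes how many replicas each service would need for a given load. All of it is assumed for the example: the `replicas_needed` function, the service names, the request rates, and the figure of 200 requests per second per replica are hypothetical values, not measurements.

```python
import math


def replicas_needed(requests_per_second: float,
                    capacity_per_replica: float,
                    minimum: int = 1) -> int:
    """Return how many replicas are needed to serve the given load.

    Rounds up so the load is always covered, and never goes below `minimum`.
    """
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    return max(minimum, math.ceil(requests_per_second / capacity_per_replica))


if __name__ == "__main__":
    # Hypothetical load per microservice, in requests per second.
    load = {"search": 1200, "checkout": 450, "admin": 5}
    for service, rps in load.items():
        print(service, replicas_needed(rps, capacity_per_replica=200))
```

With a monolithic application, the whole application would have to be scaled to the level of its busiest part; split into services, only the busy ones get extra replicas.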

Transition to containers

While it is possible to encapsulate a giant monolithic application in one container, you won’t get all the benefits that containers have to offer. Containers use a server’s resources most efficiently when apps are divided into smaller parts (especially since some microservices might need to be scaled many times, while others might only need to be scaled once).

There are a few ways you can break down monolithic applications, but unfortunately, no universal method exists. The more data dependencies there are, the more difficult it is to divide.

Which container tool is best for me?

Since both Docker and Kubernetes are open-source projects, you can start running containers on your own. But, keep in mind that transitioning large projects to containers can be a massive undertaking. If you don’t have the time or expertise to do it yourself, we recommend reaching out to an organization to set your solution up for you.

At Master DC, we offer a few types of container setups to choose from:

- Virtual servers (VPS) with KVM virtualization: allow users to install Docker and run their own containers

- Managed Kubernetes cluster running on physical servers: ideal for larger projects, since the Kubernetes orchestrator requires at least 2-3 servers to operate

- Managed Kubernetes cluster running completely in the cloud: ideal for smaller projects

- Hybrid managed Kubernetes cluster: the orchestrator runs in the cloud, and containers run on dedicated physical servers

The future of containers

Containers look set to remain influential in the technology of the near future, and now that large players like Google and IBM are placing their bets on container tools, we don’t see them going away anytime soon.

A 2019 Turbonomic report showed that 26% of IT companies had already started to use containerized applications, and that number is expected to double by 2021.

Containers have also started to appear outside of servers. On Linux, Flatpak is a container-based utility for software deployment and package management. With Flatpak, people can use applications that are isolated from the rest of the system, which strengthens security, makes updating easier, and discourages wasting resources on collecting unnecessary data.

Team Silverblue also went all-in on container technology when they created Fedora Silverblue, an experimental OS that distributes all software in containers.

Want to make sure your company isn’t left behind? At Master DC, we can work with you to determine which container solution best fits your needs, and make the transition as smooth as possible.