MasterDC Data Centre Facilities and Technologies

Last Update 6/9/2023

This document describes the physical security of the MasterDC data centres and the racks available for rent, including access and options for bringing equipment into the data centres. It also covers connectivity, power, and cooling.

Physical Security of the Data Centres

The data centre entrances are guarded by 24-hour physical security, and members of the MasterDC support team are on-site at all times. A certified electronic security system and online CCTV monitoring with recording detect everyone entering and moving around the data centre buildings, and PIR (passive infrared) sensors warn of any suspicious movement.

Anyone entering a data centre must be accompanied by an authorised employee, who identifies themselves at the two-zone entrance using a multimodal RFID/PIN reader and biometric authentication (vein pattern recognition). The biometric database is encrypted and cannot be accessed by unauthorised persons. In case of a disaster and failure of the electronic systems, the data centres are also equipped with a conventional key lock whose key is stored securely.

Although all devices and components in the data centres are monitored, customers who need a higher level of physical security for their devices can use a rack cabinet with a unique key or a cage.

Fire Safety

The data centres have a built-in automatic fire protection system with smoke and heat detectors and a gas-based extinguishing system. We use GHZ KD-200 fire extinguishers with FM200 gas (HFC-227ea, pressure 42 bar), which is harmless to servers and environmentally friendly.

Data Centres

| | Data Centre Prague | Data Centre Brno |
|---|---|---|
| Total Area | 850 m² | 750 m² |
| Expansion Possibilities | +350 m² | +500 m² |
| Location | Prague Vrsovice | Brno stred |
| Connection Capacity | N x 10 Gbit Ethernet | N x 10 Gbit Ethernet |
| Direct Connection to Other DCs | CeColo, THP, MasterDC Brno | CeColo, MasterDC Prague |
| Air Conditioning | Stulz, circuit 3-1, power backup N+1 | Vertiv, circuit 1(2)-1, power backup N+1 |

Racks

Space is available for rent in 19” Rittal racks with a height of up to 47U; smaller 10U sections are also offered. Racks are always at least 100 cm deep. Servers must be supplied with their own rails or mounting kits for installation in the rack; MasterDC provides the UTP and power cables. The standard configuration is eight IEC 320 C13 sockets per circuit breaker. Rack dimensions below are given as width x height x depth in millimetres.
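When planning a rental, rack capacity can be checked against the standard rack unit (1U = 1.75 in = 44.45 mm, per EIA-310). The sketch below assumes the quoted heights (47U, 24U, 10U) refer to usable mounting space; the function names and the server mix in the example are hypothetical.

```python
# Minimal sketch: mounting space of a rack section and whether a planned set of
# servers fits. Assumes 1U = 44.45 mm (EIA-310); names and sizes are hypothetical.

RACK_UNIT_MM = 44.45


def usable_height_mm(units: int) -> float:
    """Vertical mounting space of a section with the given number of rack units."""
    return units * RACK_UNIT_MM


def fits(section_units: int, server_heights_u: list[int]) -> bool:
    """True if servers of the given heights (in U) fit into the section."""
    return sum(server_heights_u) <= section_units


if __name__ == "__main__":
    for units in (47, 24, 10):
        print(f"{units}U -> {usable_height_mm(units):.1f} mm")
    # Hypothetical mix: two 2U servers, a 4U storage chassis and a 1U switch (9U total)
    print(fits(10, [2, 2, 4, 1]))
```

Counting in whole units rather than millimetres matches how rack space is rented; the millimetre figure is only useful for comparing against the cabinet's outer height.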

Rack Housing at DC Prague

Full Racks

- Rittal TS IT 47U 600 x 2200 x 1000

- Rittal TS IT 47U 600 x 2200 x 1200

- Rittal TS IT 47U 800 x 2200 x 1000

- Rittal TS IT 47U 800 x 2200 x 1200

Half Rack

- Rittal TS IT 2 x 24U 600 x 2200 x 1000

Quarter Rack

- Rittal TS IT 4 x 10U 600 x 2200 x 1000

Server Housing per Unit

- Rittal TS IT 47U 600 x 2200 x 1000

- Rittal TS IT 47U 600 x 2200 x 1200

Tower Housing

- Placement of the device on the shelf for tower housing

- One shelf / 24 pcs tower

Rack Housing at DC Brno

Full Racks

- Rittal TS IT 47U 600 x 2200 x 1000

- Rittal TS IT 47U 600 x 2200 x 1200

- Rittal TS IT 47U 800 x 2200 x 1000

- Rittal TS IT 47U 800 x 2200 x 1200

Server Housing per Unit

- Rittal TS IT 47U 600 x 2200 x 1000

- Rittal TS IT 47U 600 x 2200 x 1200

Tower Housing

- Placement of the device on the shelf for tower housing

- Six shelves / 24 pcs tower

Transport of Devices to the Data Centres

Both data centres are close to main roads and are easy to reach by car or public transport. Parking is available at both data centre buildings, and a loading ramp and a pallet truck are available on-site.

A local KVM console is available for on-site work on equipment. Devices are installed in cooperation with the support or technical department staff.

- Prague Data Centre – The vehicle entrance to the data centre is from Kavkazska Street. Drive directly to the loading ramp at the DC entrance. Reserved parking spaces (Nos. 6–10) are located inside the roofed courtyard.

- Brno Data Centre – Located near the city centre, with the entrance from Cejl Street. Parking spaces are reserved right next to the building, and an elevator carries your equipment from the parking lot to the data centre room.

Customer Rooms

Customers also have access to a dedicated air-conditioned room inside the data centres, with free public Wi-Fi and wired Gigabit Ethernet (GE) connectivity with DHCP. The room can be used temporarily for work on servers located in the data centre (installation, local device management, etc.).

Data Centre Power Supply

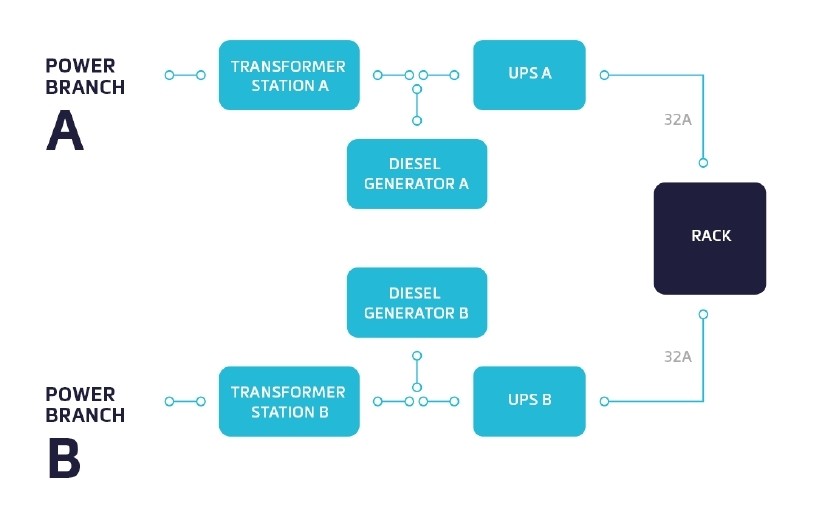

Power is supplied by two independent 230 V AC branches, “A” and “B”. If a problem occurs on one branch, the other, fully functional branch can power your device. The branches are physically separated, and each is backed up by a UPS and a motor-generator.
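How much the two branches protect a device depends on how it is connected. The sketch below assumes each of a device's power supplies is plugged into exactly one branch, and shows why a dual-corded device survives the loss of either branch while a single-corded one does not. The function and variable names are illustrative only.

```python
# Minimal sketch of A/B feed redundancy: a device stays powered as long as at
# least one branch feeding its power supplies is live. Names are illustrative.


def device_powered(connected_branches: set[str], failed_branches: set[str]) -> bool:
    """Return True if any branch the device is connected to is still live."""
    return bool(connected_branches - failed_branches)


if __name__ == "__main__":
    dual_corded = {"A", "B"}  # two PSUs, one on each branch
    single_corded = {"A"}     # one PSU, on branch A only
    for failed in (set(), {"A"}, {"B"}):
        print(sorted(failed),
              device_powered(dual_corded, failed),
              device_powered(single_corded, failed))
```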

The primary power source for the two branches is a pair of high-voltage substations, one in operation on each branch. These substations power only the data centre and are not shared with other entities.

UPS units with separate battery modules (Vertiv, modular APC Symmetra) bridge short-term power outages. The UPS units are of the online (double-conversion) type, so they also protect all devices from unexpected mains disturbances such as voltage dips, spikes, and noise.

For long-term power outages or unexpected failures, redundant 2N+1 motor-generators (Broadcrown Mitsubishi) start automatically after 40 seconds and can run for at least 24 hours without refuelling. Each supply branch has its own separate group of backup motor-generators. The data centres also store several days’ supply of diesel fuel, and in the event of a widespread nationwide power outage, arrangements are in place with suppliers to deliver more.
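The UPS only has to carry the load until the motor-generators take over. The sketch below models one branch after a mains failure using the 40-second generator start from the text. The UPS battery autonomy is not stated in this document, so it is a hypothetical input (the 600 s and 30 s values are examples only), and the generator is assumed to start successfully.

```python
# Minimal sketch of the outage timeline on one branch: UPS batteries carry the
# load from mains failure until the motor-generator takes over.
# The 40 s start comes from the text; UPS autonomy values are hypothetical.

GENERATOR_START_S = 40.0


def bridging_gap_s(ups_autonomy_s: float,
                   generator_start_s: float = GENERATOR_START_S) -> float:
    """Seconds without power between battery exhaustion and generator start (0 if none)."""
    return max(0.0, generator_start_s - ups_autonomy_s)


def branch_has_power(t_s: float, ups_autonomy_s: float,
                     generator_start_s: float = GENERATOR_START_S) -> bool:
    """Power state t_s seconds after mains failure, assuming the generator starts on time."""
    on_battery = t_s < ups_autonomy_s
    on_generator = t_s >= generator_start_s
    return on_battery or on_generator


if __name__ == "__main__":
    print(bridging_gap_s(600))  # 0.0: batteries outlast the generator start
    print(bridging_gap_s(30))   # 10.0: a 10-second gap
```

Any UPS autonomy longer than the generator start time leaves no gap; the margin beyond that covers a delayed or retried generator start.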

- Power distribution units can supply equipment through circuits protected at 10 A, 16 A, or 32 A.

- The motor-generators are tested once a month. During each test, the entire data centre load is powered by the motor-generators alone.
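The breaker ratings translate into a power budget per circuit via P = U x I. The sketch below assumes single-phase circuits at the 230 V nominal voltage quoted above and a power factor of 1; the optional derating factor is an assumption reflecting the common practice of keeping continuous load below the breaker rating, not a MasterDC rule.

```python
# Minimal sketch: power limit of a single-phase 230 V circuit for each breaker
# rating (P = U x I, power factor assumed to be 1). The derating factor is an
# assumption; 1.0 means the full breaker rating.

NOMINAL_VOLTAGE_V = 230.0


def max_power_w(breaker_a: float, derating: float = 1.0,
                voltage_v: float = NOMINAL_VOLTAGE_V) -> float:
    """Power limit in watts for a circuit protected by the given breaker."""
    return voltage_v * breaker_a * derating


if __name__ == "__main__":
    for rating in (10, 16, 32):
        print(f"{rating} A -> {max_power_w(rating):.0f} W")
    # 10 A -> 2300 W, 16 A -> 3680 W, 32 A -> 7360 W
```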

Specification of Power Supply – DC Prague

| | Branch A | Branch B |
|---|---|---|
| Power Transformer | 1 250 kVA | 1 250 kVA |
| Diesel Generator | 2x 700 kVA, load sharing | 1x 1 650 kVA |
| UPS | Online N+1 UPS nest, 4x 200 kVA | 1x online modular, scalable, 500 kVA |
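The N+1 designation can be read as simple arithmetic: with one module held in reserve, the 4x 200 kVA nest on branch A can carry 600 kVA of load and still tolerate the failure of any single module. The sketch below assumes identical modules and that "+1" means exactly one spare module; the function name is hypothetical.

```python
# Minimal sketch of N+1 capacity: the load a set of identical modules can carry
# while still surviving the loss of `redundant_modules` of them.
# Assumes identical modules; the function name is hypothetical.


def usable_capacity_kva(module_kva: float, modules: int,
                        redundant_modules: int = 1) -> float:
    """Capacity available to the load with the given number of modules in reserve."""
    if redundant_modules >= modules:
        raise ValueError("need at least one non-redundant module")
    return module_kva * (modules - redundant_modules)


if __name__ == "__main__":
    print(usable_capacity_kva(200, 4))  # branch A, Prague: 600
```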

Specification of Power Supply – DC Brno

| | Branch A | Branch B |
|---|---|---|
| Power Transformer | 1 000 kVA | 1 000 kVA |
| Diesel Generator | 700 kVA + 530 kVA | 2x 700 kVA |
| UPS | 1x online modular, scalable, 250 kVA; online UPS 2x 80 kVA + 2x 40 kVA | 2x online modular, scalable, 150 kVA + 250 kVA; online UPS 80 kVA + 2x 40 kVA |

Power Distribution System Diagram

Connectivity

MasterDC’s core network is fully redundant. All network nodes are connected via at least two independent paths, and if one line fails, traffic is automatically rerouted to the backup line. The individual data centre networks are also redundantly connected to the backbone network.

The network is built as an L3 leaf-spine design using 40 GE and 100 GE links. We are connected to the internet exchange points nix.cz (2x 100 GE, Prague), nix.sk (10 GE, Bratislava), br-ix (10 GE, Brno), and peering.cz (1x 10 GE, Prague). International transit is provided by two suppliers: Arelion (100 GE, Prague) and RETN (100 GE, Brno). We also use private network interconnects (PNI) with major providers such as Google.

Customer devices in the data centres can be connected at 1, 10, 25, 40, or 100 GE using SFP, SFP+, SFP28, QSFP+, and QSFP28 transceivers respectively, or alternatively at 1 GE. Systems and servers can also be interconnected through the customer’s internal network at either L2 (VLAN) or L3 (VRF).
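The speed-to-transceiver pairing above follows the standard nominal rates of each pluggable form factor. The sketch below encodes that mapping as a lookup; it ignores backward compatibility (for example, many SFP+ ports also accept 1 GE SFP modules), and the function name is hypothetical.

```python
# Nominal line rate of each pluggable form factor listed in the text.
# Backward compatibility between port types is deliberately ignored here.

FORM_FACTOR_BY_SPEED_GBPS = {
    1: "SFP",
    10: "SFP+",
    25: "SFP28",
    40: "QSFP+",
    100: "QSFP28",
}


def form_factor_for(speed_gbps: int) -> str:
    """Return the transceiver form factor for a port speed in GE (hypothetical helper)."""
    try:
        return FORM_FACTOR_BY_SPEED_GBPS[speed_gbps]
    except KeyError:
        raise ValueError(f"unsupported port speed: {speed_gbps} GE") from None


if __name__ == "__main__":
    print(form_factor_for(25))   # SFP28
    print(form_factor_for(100))  # QSFP28
```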

Customers can choose single-branch or dual-branch connectivity.

With a second connectivity branch, the following L2 backup configurations are supported:

- L2 – Ports on the MasterDC side are connected to physically separate switches in a single VLAN with BPDU Guard enabled. This wiring is suitable for connecting end-device ports on the customer side, such as servers with active-passive bonding, or firewalls and routers in HA with VRRP.

- L2 with STP – Ports on the MasterDC side are connected to physically separate switches in a single VLAN with RSTP and Root Guard enabled. This wiring is suitable for connecting customer-side switches running RSTP.

- L2 with LACP – The ports on the MasterDC side are connected to physically separate switches with MLAG. In addition, LACP is enabled on the customer-facing ports. This wiring is suitable for servers with active-active bonding or switches with LACP support on the customer side.
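The practical difference between the active-passive and active-active (LACP) options shows up in usable bandwidth. The sketch below is a simplified model, not a bonding driver: active-backup forwards on one healthy link at a time, while LACP spreads flows across all healthy links, although per-flow hashing means a single flow still uses at most one link. Mode names and the function are hypothetical.

```python
# Simplified model of customer-side bonding over two uplinks.
# "active-backup": one link forwards traffic, another takes over on failure.
# "lacp": all healthy links carry traffic (per-flow hashing, so one flow
# still uses at most one link). Mode names are hypothetical labels.


def usable_bandwidth_gbps(mode: str, link_speeds_gbps: list[float],
                          links_up: list[bool]) -> float:
    """Aggregate bandwidth available across all flows for the given link states."""
    healthy = [speed for speed, up in zip(link_speeds_gbps, links_up) if up]
    if not healthy:
        return 0.0
    if mode == "active-backup":
        return float(healthy[0])  # only the active link forwards traffic
    if mode == "lacp":
        return float(sum(healthy))
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    links = [10.0, 10.0]
    print(usable_bandwidth_gbps("active-backup", links, [True, True]))  # 10.0
    print(usable_bandwidth_gbps("lacp", links, [True, True]))           # 20.0
    print(usable_bandwidth_gbps("lacp", links, [True, False]))          # 10.0
```

In both modes the loss of one uplink leaves the device connected; LACP additionally uses the second link while both are healthy.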

The second connectivity branch also supports L3 solutions using:

- Static routing and VRRP.

- Dynamic routing using BGP in a mesh topology, with a private AS on the customer side.
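Two rules behind these L3 options can be checked in a few lines. The sketch below is a simplified model, not a VRRP or BGP implementation: it applies the VRRP master election rule from RFC 5798 (highest priority wins, ties broken by the higher primary IP address) and tests whether an AS number falls in the private-use ranges reserved by RFC 6996. The router names and addresses are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class VrrpRouter:
    name: str        # hypothetical device name
    priority: int    # 1-254 for backups; 255 is reserved for the virtual IP owner
    primary_ip: str


def elect_vrrp_master(routers: list[VrrpRouter]) -> VrrpRouter:
    """Highest priority wins; on a tie, the higher primary IP address wins (RFC 5798)."""
    return max(routers,
               key=lambda r: (r.priority, ipaddress.ip_address(r.primary_ip)))


def is_private_asn(asn: int) -> bool:
    """True if the ASN is in a private-use range reserved by RFC 6996."""
    return 64512 <= asn <= 65534 or 4_200_000_000 <= asn <= 4_294_967_294


if __name__ == "__main__":
    fw1 = VrrpRouter("fw1", 200, "192.0.2.2")
    fw2 = VrrpRouter("fw2", 200, "192.0.2.3")
    print(elect_vrrp_master([fw1, fw2]).name)            # fw2 (tie, higher IP)
    print(is_private_asn(64512), is_private_asn(65535))  # True False
```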

Cooling System

The data centres use Vertiv (formerly Liebert) or Stulz precision air-conditioning units (CCU) with an output of up to 100 kW, operating in N+1 mode. If one unit fails or is under maintenance, the remaining units take over its load. Cooling output is controlled electronically according to the heat load by switching cooling circuits on and off.
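N+1 sizing means installing one more unit than the heat load strictly needs. The sketch below assumes every unit delivers the full 100 kW quoted above; real output depends on operating conditions, and the function name and example load are hypothetical.

```python
import math

# Minimal sketch of N+1 cooling sizing. Assumes each unit delivers the full
# "up to 100 kW" quoted in the text; names and loads are hypothetical.

UNIT_CAPACITY_KW = 100.0


def units_required(heat_load_kw: float,
                   unit_capacity_kw: float = UNIT_CAPACITY_KW) -> tuple[int, int]:
    """Return (units needed for the load, units installed for N+1)."""
    if heat_load_kw < 0:
        raise ValueError("heat load cannot be negative")
    n = max(1, math.ceil(heat_load_kw / unit_capacity_kw))
    return n, n + 1


if __name__ == "__main__":
    print(units_required(250))  # (3, 4): three units carry the load, one is spare
```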

The compressor circuits use R407C refrigerant. In the Stulz free-cooling units, a pumped glycol circuit is connected by pressure piping to Güntner outdoor dry coolers with an output of 150 kW, while the direct-expansion units use outdoor condensers.

The data centre rooms have a raised floor and a cold/hot aisle layout. The cold aisles are enclosed: the AC units blow cold air into the space under the floor, from which it enters the cold aisles through perforated floor tiles. The equipment in the racks heats the air, which is then drawn back into the AC units to be cooled again.

- All unused positions of the racks are covered to prevent the mixing of warm and cold air.

- The temperature in the cold aisles is kept between 22 and 25 °C.

- The relative humidity in the data centre rooms is kept between 40 and 60%.
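The two environmental limits above lend themselves to an automated check of sensor readings. The sketch below uses the stated ranges as inclusive bounds; the function name and the example readings are hypothetical, and how readings are collected is outside its scope.

```python
# Minimal sketch: flag sensor readings outside the stated environmental limits.
# Ranges are from the text (inclusive); names and readings are hypothetical.

COLD_AISLE_TEMP_C = (22.0, 25.0)
RELATIVE_HUMIDITY_PCT = (40.0, 60.0)


def check_reading(temp_c: float, humidity_pct: float) -> list[str]:
    """Return out-of-range findings; an empty list means the reading is within limits."""
    problems = []
    lo, hi = COLD_AISLE_TEMP_C
    if not lo <= temp_c <= hi:
        problems.append(f"cold-aisle temperature {temp_c} °C outside {lo}-{hi} °C")
    lo, hi = RELATIVE_HUMIDITY_PCT
    if not lo <= humidity_pct <= hi:
        problems.append(f"relative humidity {humidity_pct} % outside {lo}-{hi} %")
    return problems


if __name__ == "__main__":
    print(check_reading(23.5, 45))  # []
    for finding in check_reading(26.1, 38):
        print(finding)
```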